- spaCy Part-of-Speech Tagger Download
- spaCy Part-of-Speech Tagger How-To
- spaCy Part-of-Speech Tagger Code

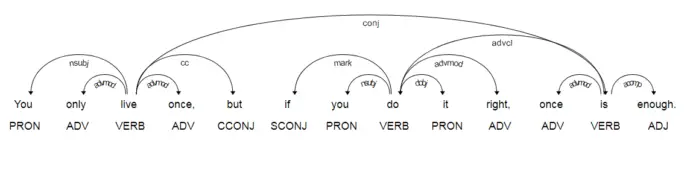

# spaCy Part-of-Speech Tagger Download #

A quick Google search yields nothing, and even the (very neat) API documentation does not seem to help at first. Still, you can do most of the usual preprocessing with spaCy and some regexes; you just have to take a closer look at the spaCy API documentation. Basic steps in any NLP pipeline are the following:

Language detection - self-explanatory: if you're working with some dataset, you know what the language is, and you can adapt your pipeline to that.

When you know the language, you have to download the correct model from spaCy. In your command line just type `python -m spacy download en_core_web_sm` (spaCy 2.x also accepted the shortcut `en`, which spaCy 3 no longer supports), then import and load it in the preprocessing script: `import spacy` and `nlp = spacy.load("en_core_web_sm")`.

Tokenization - this is the process of splitting the text into words. It's not enough to just do `text.split()`. For example, *there's* would be treated as a single word, but it's actually two words (*there* and *is*). In spaCy you can do something like `doc = nlp(text)`, where `text` is your dataset corpus or a sample from it. You can read more about the resulting `Doc` object in the spaCy API documentation.

Punctuation removal - a pretty self-explanatory process, largely done by the tokenization in the previous step, which splits punctuation into separate tokens. To also remove punctuation left inside word strings (like `'bye!'` → `'bye'`), you can use a regular expression via Python's `re` module.

POS tagging - short for part-of-speech tagging. It is the process of marking up each word in a text as corresponding to a particular part of speech. For example:

A/DT Part-Of-Speech/NNP Tagger/NNP is/VBZ a/DT piece/NN of/IN Software/NN that/WDT reads/VBZ text/NN in/IN some/DT Language/NN and/CC assigns/VBZ parts/NNS of/IN speech/NN to/TO each/DT word/NN ,/, such/JJ as/IN noun/NN ,/, verb/NN ,/, …

The uppercase codes after the slashes are standard word tags (the Penn Treebank tag set). In spaCy, this is already done by passing the text through the `nlp` instance. You can get the tags with `for token in doc: print(token.text, token.tag_)`.

Morphological processing: lemmatization - the process of transforming words into a linguistically valid base form, called the lemma:

- nouns → singular, nominative form
- adjectives → singular, nominative, masculine, indefinite, positive form

In spaCy, this is also already done for you when the text goes through the `nlp` instance. You can get the lemma of every word with `for token in doc: print(token.text, token.lemma_)`.

Removing stopwords - stopwords are words that don't bring any new information or meaning to the sentence and can be omitted. You guessed it: the `nlp` instance already flags them for you through each token's `is_stop` attribute. To filter the stopwords, just type `text_without_stopwords = [token.text for token in doc if not token.is_stop]` and then rebuild the document with `doc = nlp(' '.join(text_without_stopwords))`.
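The original regular expression for the punctuation-removal step did not survive. As a stand-in, here is a minimal sketch that builds a character class from Python's `string.punctuation` and strips it from each token, so that `'bye!'` becomes `'bye'`. It assumes only ASCII punctuation matters; curly quotes and other Unicode marks are not covered. The function names `strip_punct` and `clean_tokens` are hypothetical.

```python
import re
import string

# Character class matching any ASCII punctuation character. re.escape keeps
# characters such as "]", "^" and "\\" from being read as regex syntax.
PUNCT_RE = re.compile("[" + re.escape(string.punctuation) + "]")


def strip_punct(word: str) -> str:
    """Remove every ASCII punctuation character from a single token."""
    return PUNCT_RE.sub("", word)


def clean_tokens(tokens: list[str]) -> list[str]:
    """Strip punctuation from each token and drop tokens that end up empty."""
    cleaned = (strip_punct(token) for token in tokens)
    return [token for token in cleaned if token]


if __name__ == "__main__":
    print(strip_punct("bye!"))
    print(clean_tokens("bye! see you , later .".split()))
```

This pattern also strips apostrophes, so a spaCy token like `'s` would become `s`. Checking spaCy's `token.is_punct` instead avoids that, because it only matches tokens made up entirely of punctuation.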
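You can try the tokenization and stopword steps without downloading any model. The sketch below assumes spaCy v3 is installed and uses `spacy.blank("en")`. That pipeline provides the English tokenizer and stop-word list but no trained tagger or lemmatizer, so `token.tag_` and `token.lemma_` are not populated here. For those you would load a trained pipeline such as `en_core_web_sm` instead. The helper `content_words` is hypothetical.

```python
import spacy

# Blank English pipeline: tokenizer plus language defaults (such as the
# stop-word list), no trained components, so nothing has to be downloaded.
nlp = spacy.blank("en")


def content_words(text: str) -> list[str]:
    """Hypothetical helper: tokenize with spaCy, then drop stop words and punctuation."""
    doc = nlp(text)
    return [token.text for token in doc if not token.is_stop and not token.is_punct]


if __name__ == "__main__":
    # str.split() keeps the contraction as one string; spaCy splits it in two.
    print("there's".split())
    print([token.text for token in nlp("there's")])
    print(content_words("The cat is on the mat!"))
```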

# spaCy Part-of-Speech Tagger How-To #

One-sentence backdrop: I have text data from auto-transcribed talks, and I want to compare the similarity of their content (e.g. what they are talking about) to do clustering and recommendation. I am quite new to NLP.

Data: The data I am using is available here. For all the lazy ones, here is a snippet of code to put it in a df:

```python
import os, json
from pandas import json_normalize  # pandas.io.json.json_normalize is deprecated since pandas 1.0

# (loop over the JSON files in path_to_json, which defines js, not shown)
with open(os.path.join(path_to_json, js)) as json_file:
    json_text = json_normalize(json.load(json_file))
    tddata.loc = str(json_text)  # (row label for .loc not shown)
```

Approach: So far, I have only used the spaCy package to do "out of the box" similarity. I simply apply the `nlp` model to the entire corpus of text and compare each talk to all the others.

Problem: Practically all similarities come out as > 0.95. This is more or less independent of the baseline. Now, this may not come as a major surprise given the lack of preprocessing.

Solution strategy: Following the advice in this post, I would like to do the following (using spaCy where possible):

1) Remove stop words.
…
4) Possibly use Doc2Vec outside of spaCy.

Questions: Does the above seem like a sound strategy? If not, what's missing? If yes, how much of this is already happening under the hood when using the pre-trained model loaded with `nlp = spacy.load('en_core_web_lg')`? I can't seem to find the documentation that demonstrates what exactly these models are doing, or how to configure them.

# spaCy Part-of-Speech Tagger Code #

You can create a `Tagger` component directly, but in your application you would normally use a shortcut and instantiate the component by its string name with `nlp.add_pipe`. Its settings are:

- `model` - a model instance that predicts the tag probabilities. The output vectors should match the number of tags in size and be normalized as probabilities (all scores between 0 and 1, with the rows summing to 1).
- `name` - the string name of the component instance, used to add entries to the losses during training.
- `overwrite` - whether existing annotation is overwritten.
- `scorer` - the scoring method; defaults to `Scorer.score_token_attr` for the attribute `"tag"`.

When the component is called on a `Doc`, the document is modified in place and returned. This usually happens under the hood when the `nlp` object is called on a text and all pipeline components are applied to the `Doc` in order.
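In spaCy v3, the shortcut for instantiating a component by its string name is `nlp.add_pipe`. A minimal sketch, assuming spaCy v3 is installed, adds a tagger to a blank English pipeline. The tagger is untrained, so in practice you would either train it or load a pretrained pipeline such as `en_core_web_sm`, whose tagger already fills `token.tag_`. The `"NN"` label is only an example.

```python
import spacy

# Blank English pipeline (tokenizer only); no model download needed.
nlp = spacy.blank("en")

# Shortcut: add the component by its registered string name instead of
# constructing spacy.pipeline.Tagger by hand. add_pipe returns the component.
tagger = nlp.add_pipe("tagger")

# A new tagger starts without labels. They are normally collected from the
# training data when the pipeline is initialized, but can be added by hand.
tagger.add_label("NN")

print(nlp.pipe_names)
print("NN" in tagger.labels)
```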
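The question reports that nearly all similarities exceed 0.95. spaCy's `Doc.similarity` by default is the cosine similarity of the two documents' vectors, and `Doc.vector` defaults to the average of the token vectors. The toy sketch below illustrates one plausible contributor, not a diagnosis of the talk transcripts: frequent function words pull long averages toward a shared direction, and dropping them separates the documents. The 3-dimensional vectors, the two-word stop list and the function names are all made up for illustration.

```python
import math

# Made-up 3-d "word vectors" for illustration only; real pipelines such as
# en_core_web_lg ship vectors with hundreds of dimensions.
TOY_VECTORS = {
    "the": [1.0, 1.0, 1.0],
    "and": [1.0, 1.0, 0.9],
    "economy": [1.0, 0.0, 0.0],
    "football": [0.0, 1.0, 0.0],
}
STOP_WORDS = {"the", "and"}  # hypothetical two-word stop list


def doc_vector(words: list[str]) -> list[float]:
    """Average the word vectors (words missing from the toy table are skipped)."""
    vectors = [TOY_VECTORS[w] for w in words if w in TOY_VECTORS]
    return [sum(component) / len(vectors) for component in zip(*vectors)]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def similarity(doc_a: list[str], doc_b: list[str], drop_stop_words: bool = False) -> float:
    """Cosine similarity of the averaged vectors, optionally after removing stop words."""
    if drop_stop_words:
        doc_a = [w for w in doc_a if w not in STOP_WORDS]
        doc_b = [w for w in doc_b if w not in STOP_WORDS]
    return cosine(doc_vector(doc_a), doc_vector(doc_b))


if __name__ == "__main__":
    talk_a = "the economy and the economy".split()
    talk_b = "the football and the football".split()
    print(round(similarity(talk_a, talk_b), 3))              # 0.906
    print(similarity(talk_a, talk_b, drop_stop_words=True))  # 0.0
```

With the stop words included, two talks on unrelated topics score above 0.9. Without them, the same pair scores 0.0. This matches the intuition behind step 1) of the proposed strategy.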
